
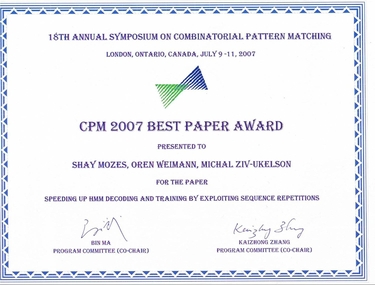

Speeding Up HMM Decoding and Training by Exploiting Sequence Repetitions

This page contains links to the conference and journal versions of this paper, the conference presentation, and a couple of implementations of some of the ideas presented in the paper. The code may be downloaded and used for scholarly and academic purposes, in which case we request that you include a reference to our paper.

Speeding Up HMM Decoding and Training by Exploiting Sequence Repetitions.
Shay Mozes, Oren Weimann and Michal Ziv-Ukelson.
In Proceedings of the 18th Annual Symposium on Combinatorial Pattern Matching (CPM 2007), pages 4-15.

Speeding Up HMM Decoding and Training by Exploiting Sequence Repetitions.
Yury Lifshits, Shay Mozes, Oren Weimann and Michal Ziv-Ukelson.
In Algorithmica (2007).


PPT presentation (CPM 2007)

Abstract

We present a method to speed up the dynamic programming algorithms used for solving the HMM decoding and training problems for discrete time-independent HMMs. We discuss the application of our method to Viterbi's decoding and training algorithms, as well as to the forward-backward and Baum-Welch algorithms.
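Each step of Viterbi's dynamic program can be viewed as a matrix-vector product in a max-times semiring (max-plus once probabilities are replaced by log-probabilities), which is what makes repeated substrings reusable. The minimal C++ sketch below shows only the classical baseline under that view. It assumes log-space inputs, a state distribution given at time 0, and a hypothetical per-symbol matrix M^σ[i][j] = log e_i(σ) + log P(j → i); `viterbiLogProb` and the other names are illustrative and are not taken from viterbi.cpp.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// All quantities are log-probabilities; -infinity encodes probability 0.
using Vec = std::vector<double>;
using Mat = std::vector<std::vector<double>>;
const double NEG_INF = -std::numeric_limits<double>::infinity();

// Assumed convention: M^sigma[i][j] = log e[i][sigma] + log P[j][i], i.e. move
// from state j to state i, then emit sigma from state i.
Mat symbolMatrix(const Mat& logP, const Mat& logE, int sigma) {
    const std::size_t k = logP.size();
    Mat m(k, Vec(k));
    for (std::size_t i = 0; i < k; ++i)
        for (std::size_t j = 0; j < k; ++j)
            m[i][j] = logE[i][sigma] + logP[j][i];
    return m;
}

// Max-plus matrix-vector product: out[i] = max_j (m[i][j] + v[j]).
Vec maxPlusMatVec(const Mat& m, const Vec& v) {
    Vec out(m.size(), NEG_INF);
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < v.size(); ++j)
            out[i] = std::max(out[i], m[i][j] + v[j]);
    return out;
}

// Classical Viterbi: one O(k^2) max-plus step per observed symbol.
// logInit is the state distribution at time 0; x holds symbols 0..s-1.
// Returns the log-probability of the most probable hidden-state path.
double viterbiLogProb(const Mat& logP, const Mat& logE, const Vec& logInit,
                      const std::vector<int>& x) {
    std::vector<Mat> symbolMats;  // one matrix per alphabet symbol
    for (std::size_t sigma = 0; sigma < logE[0].size(); ++sigma)
        symbolMats.push_back(symbolMatrix(logP, logE, static_cast<int>(sigma)));
    Vec v = logInit;
    for (int sigma : x) v = maxPlusMatVec(symbolMats[sigma], v);
    return *std::max_element(v.begin(), v.end());
}
```

For a two-state model with P = [[0.7, 0.3], [0.4, 0.6]], E = [[0.9, 0.1], [0.2, 0.8]], a uniform time-0 distribution and x = 0, 1, exponentiating the result gives 0.0756.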

Our approach is based on identifying repeated substrings in the observed input sequence. Initially, we show how to exploit repetitions of all sufficiently small substrings (this is similar to the Four Russians method).
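Because max-plus matrix products are associative, the product for a whole substring can be computed once and reused wherever that substring occurs. The sketch below illustrates a Four-Russians-style version of this idea, assuming the same log-space per-symbol matrices M^σ as a plain Viterbi step: it precomputes the matrix of every word of length b (s^b words, each built from a shorter word with one O(k^3) product) and then advances the state vector one block of b symbols at a time. The function names and the base-s word encoding are hypothetical, and how b is chosen is left to the caller.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Log-space: entries are log-probabilities, -infinity encodes probability 0.
using Vec = std::vector<double>;
using Mat = std::vector<std::vector<double>>;
const double NEG_INF = -std::numeric_limits<double>::infinity();

// Max-plus matrix product: c[i][j] = max_l (a[i][l] + b[l][j]).
Mat maxPlusMul(const Mat& a, const Mat& b) {
    const std::size_t k = a.size();
    Mat c(k, Vec(k, NEG_INF));
    for (std::size_t i = 0; i < k; ++i)
        for (std::size_t l = 0; l < k; ++l)
            for (std::size_t j = 0; j < k; ++j)
                c[i][j] = std::max(c[i][j], a[i][l] + b[l][j]);
    return c;
}

// Max-plus matrix-vector product: out[i] = max_j (m[i][j] + v[j]).
Vec maxPlusMatVec(const Mat& m, const Vec& v) {
    Vec out(m.size(), NEG_INF);
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < v.size(); ++j)
            out[i] = std::max(out[i], m[i][j] + v[j]);
    return out;
}

// Precomputes M(w) for all s^b words w of length b (b >= 1). A word
// w_1..w_b is encoded as the base-s number w_1 w_2 ... w_b.
std::vector<Mat> precomputeWords(const std::vector<Mat>& symbolMats, int b) {
    const std::size_t s = symbolMats.size();
    std::vector<Mat> words = symbolMats;  // all words of length 1
    for (int len = 2; len <= b; ++len) {
        std::vector<Mat> longer(words.size() * s);
        for (std::size_t code = 0; code < words.size(); ++code)
            for (std::size_t sigma = 0; sigma < s; ++sigma)
                // Appending sigma applies its matrix last, i.e. on the left.
                longer[code * s + sigma] = maxPlusMul(symbolMats[sigma], words[code]);
        words = std::move(longer);
    }
    return words;
}

// One precomputed matrix-vector product per block of b symbols, then
// single-symbol steps for any leftover suffix shorter than b.
double viterbiBlocksLogProb(const std::vector<Mat>& symbolMats, const Vec& logInit,
                            const std::vector<int>& x, int b) {
    const std::vector<Mat> words = precomputeWords(symbolMats, b);
    const std::size_t s = symbolMats.size();
    const std::size_t blockLen = static_cast<std::size_t>(b);
    Vec v = logInit;
    std::size_t t = 0;
    for (; t + blockLen <= x.size(); t += blockLen) {
        std::size_t code = 0;
        for (std::size_t i = 0; i < blockLen; ++i) code = code * s + x[t + i];
        v = maxPlusMatVec(words[code], v);
    }
    for (; t < x.size(); ++t) v = maxPlusMatVec(symbolMats[x[t]], v);
    return *std::max_element(v.begin(), v.end());
}
```

With b = 1 this reduces to the classical algorithm, which makes it easy to check that both give the same log-probability up to floating-point rounding.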

Then, we describe four algorithms based alternatively on run-length encoding (RLE), Lempel-Ziv (LZ78) parsing, grammar-based compression (SLP), and byte pair encoding (BPE).
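For run-length encoding, one simple way to exploit a run σ^ℓ is to raise M^σ to the ℓ-th power by repeated squaring, which costs O(k^3 log ℓ) instead of ℓ separate O(k^2) steps. The sketch below illustrates this under the same assumed log-space matrices; it is not necessarily the exact RLE algorithm from the paper, and `maxPlusPow` and `viterbiRleLogProb` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Log-space: entries are log-probabilities, -infinity encodes probability 0.
using Vec = std::vector<double>;
using Mat = std::vector<std::vector<double>>;
const double NEG_INF = -std::numeric_limits<double>::infinity();

// Max-plus matrix product: c[i][j] = max_l (a[i][l] + b[l][j]).
Mat maxPlusMul(const Mat& a, const Mat& b) {
    const std::size_t k = a.size();
    Mat c(k, Vec(k, NEG_INF));
    for (std::size_t i = 0; i < k; ++i)
        for (std::size_t l = 0; l < k; ++l)
            for (std::size_t j = 0; j < k; ++j)
                c[i][j] = std::max(c[i][j], a[i][l] + b[l][j]);
    return c;
}

// Max-plus matrix-vector product: out[i] = max_j (m[i][j] + v[j]).
Vec maxPlusMatVec(const Mat& m, const Vec& v) {
    Vec out(m.size(), NEG_INF);
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < v.size(); ++j)
            out[i] = std::max(out[i], m[i][j] + v[j]);
    return out;
}

// m^e in the max-plus semiring by repeated squaring: O(k^3 log e) work.
Mat maxPlusPow(Mat m, std::size_t e) {
    const std::size_t k = m.size();
    Mat result(k, Vec(k, NEG_INF));
    for (std::size_t i = 0; i < k; ++i) result[i][i] = 0.0;  // max-plus identity
    while (e > 0) {
        if (e & 1) result = maxPlusMul(m, result);
        m = maxPlusMul(m, m);
        e >>= 1;
    }
    return result;
}

// Decodes a run-length-encoded sequence given as (symbol, run length) pairs;
// each run costs one matrix power and one matrix-vector product.
double viterbiRleLogProb(const std::vector<Mat>& symbolMats, const Vec& logInit,
                         const std::vector<std::pair<int, std::size_t>>& runs) {
    Vec v = logInit;
    for (const auto& run : runs)
        v = maxPlusMatVec(maxPlusPow(symbolMats[run.first], run.second), v);
    return *std::max_element(v.begin(), v.end());
}
```

Because every run recomputes its own squarings, this version only pays off for long runs; caching the squarings of each M^σ would be a natural refinement.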

Compared to Viterbi's algorithm, we achieve speedups of Theta(log n) using the Four Russians method, Omega(r/log r) using RLE, Omega(log n / k) using LZ78, Omega(r/k) using SLP, and Omega(r) using BPE, where k is the number of hidden states, n is the length of the observed sequence, and r is its compression ratio (under each compression scheme).
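The LZ78 case can be sketched as follows, again assuming log-space per-symbol matrices M^σ. The observed sequence is parsed into LZ78 phrases; since each new phrase extends an earlier phrase by one symbol, its matrix costs one O(k^3) max-plus product, and applying it to the state vector costs O(k^2). Under the standard bound of O(n/log n) LZ78 phrases for a constant-size alphabet, this is O(k^3 n / log n) work versus O(k^2 n), which is consistent with the Omega(log n / k) figure above. This is an illustrative sketch, not the code from trie.cpp; `viterbiLz78LogProb` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <map>
#include <utility>
#include <vector>

// Log-space: entries are log-probabilities, -infinity encodes probability 0.
using Vec = std::vector<double>;
using Mat = std::vector<std::vector<double>>;
const double NEG_INF = -std::numeric_limits<double>::infinity();

// Max-plus matrix product: c[i][j] = max_l (a[i][l] + b[l][j]).
Mat maxPlusMul(const Mat& a, const Mat& b) {
    const std::size_t k = a.size();
    Mat c(k, Vec(k, NEG_INF));
    for (std::size_t i = 0; i < k; ++i)
        for (std::size_t l = 0; l < k; ++l)
            for (std::size_t j = 0; j < k; ++j)
                c[i][j] = std::max(c[i][j], a[i][l] + b[l][j]);
    return c;
}

// Max-plus matrix-vector product: out[i] = max_j (m[i][j] + v[j]).
Vec maxPlusMatVec(const Mat& m, const Vec& v) {
    Vec out(m.size(), NEG_INF);
    for (std::size_t i = 0; i < m.size(); ++i)
        for (std::size_t j = 0; j < v.size(); ++j)
            out[i] = std::max(out[i], m[i][j] + v[j]);
    return out;
}

// Parses x into LZ78 phrases on the fly and applies one matrix per phrase.
double viterbiLz78LogProb(const std::vector<Mat>& symbolMats, const Vec& logInit,
                          const std::vector<int>& x) {
    // LZ78 trie: children[node] maps a symbol to a child node; node 0 is the
    // empty phrase. phraseMats[node] is the max-plus product for that phrase.
    std::vector<std::map<int, std::size_t>> children(1);
    std::vector<Mat> phraseMats(1);  // entry 0 (empty phrase) is never used
    Vec v = logInit;
    std::size_t node = 0;
    for (int sigma : x) {
        const auto it = children[node].find(sigma);
        if (it != children[node].end()) {  // still inside a known phrase
            node = it->second;
            continue;
        }
        // New phrase = phrase(node) + sigma: one matrix product from its prefix.
        Mat m = node == 0 ? symbolMats[sigma]
                          : maxPlusMul(symbolMats[sigma], phraseMats[node]);
        children[node][sigma] = children.size();
        children.emplace_back();
        v = maxPlusMatVec(m, v);  // apply the whole phrase at once
        phraseMats.push_back(std::move(m));
        node = 0;
    }
    // A trailing incomplete phrase always matches an existing trie node.
    if (node != 0) v = maxPlusMatVec(phraseMats[node], v);
    return *std::max_element(v.begin(), v.end());
}
```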

Our experimental results demonstrate that our new algorithms are indeed faster in practice. We also discuss a parallel implementation of our algorithms.

Implementations

C++ Implementations of different variants of Viterbi's algorithm

These C++ implementations were used to produce the results section of the paper. They are not complete implementations of Viterbi's algorithm: all variants assume DNA sequences (alphabet size 4) and only compute the probability of the most probable sequence of hidden states. Traceback of the optimal sequence itself is not implemented, but should be easy to add. The code is not well documented, but it is not very complicated either. I will be happy to try to answer any questions that arise.
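Adding traceback to the classical algorithm mainly means storing, for every time step and state, which predecessor achieved the maximum, and then following those back-pointers from the best final state. The sketch below shows one way to do this; it is not the code from viterbi.cpp. It assumes log-space inputs, a state distribution given at time 0, and emission from the destination state of each transition; `viterbiWithTraceback` and `ViterbiResult` are hypothetical names. The back-pointer table takes O(nk) memory.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

// Log-space: entries are log-probabilities, -infinity encodes probability 0.
using Vec = std::vector<double>;
using Mat = std::vector<std::vector<double>>;
const double NEG_INF = -std::numeric_limits<double>::infinity();

struct ViterbiResult {
    double logProb;           // log-probability of the best path
    std::vector<int> states;  // states[t] = hidden state that emitted x[t]
};

// Viterbi with back-pointers. Assumed conventions: logP[j][i] = log P(j -> i),
// logE[i][s] = log e_i(s), logInit = log state distribution at time 0.
ViterbiResult viterbiWithTraceback(const Mat& logP, const Mat& logE, const Vec& logInit,
                                   const std::vector<int>& x) {
    const std::size_t k = logInit.size();
    Vec v = logInit;
    // back[t][i] = best predecessor of state i when emitting x[t].
    std::vector<std::vector<int>> back(x.size(), std::vector<int>(k, 0));
    for (std::size_t t = 0; t < x.size(); ++t) {
        Vec next(k, NEG_INF);
        for (std::size_t i = 0; i < k; ++i)
            for (std::size_t j = 0; j < k; ++j) {
                const double cand = v[j] + logP[j][i] + logE[i][x[t]];
                if (cand > next[i]) {
                    next[i] = cand;
                    back[t][i] = static_cast<int>(j);
                }
            }
        v = std::move(next);
    }
    // Start from the best final state and follow back-pointers to time 1.
    int s = static_cast<int>(std::max_element(v.begin(), v.end()) - v.begin());
    ViterbiResult result{v[s], std::vector<int>(x.size())};
    for (std::size_t t = x.size(); t-- > 0;) {
        result.states[t] = s;
        s = back[t][s];
    }
    return result;
}
```

For a two-state model with P = [[0.7, 0.3], [0.4, 0.6]], E = [[0.9, 0.1], [0.2, 0.8]], a uniform time-0 distribution and x = 0, 1, the recovered hidden states are 0 then 1.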

viterbi.cpp - the classical Viterbi algorithm

vit_rep.cpp - Viterbi's algorithm using matrices. Divides the sequence into words of size b and precomputes the matrices corresponding to all possible words.

trie.cpp, trie_sep.cpp, trie_dfs.cpp - Different variants that compute the LZ78 trie and run Viterbi's algorithm on the compressed sequence (see section 4.3 of the journal version). The variants differ in the way the matrices are computed.

lz_cut.cpp, lz_cut2.cpp - Perform LZ78 compression, but only use words that appear sufficiently many times (see section 4.4 of the journal version). The two variants differ in the way they parse the input sequence into only "good" words.

Multithreaded Java Implementation

I coded this as a final project for a multiprocessor synchronization class. In the project I implemented many variants of the "Four Russians" version of the algorithm. Here I did implement the traceback step for recovering the optimal sequence of hidden states. The variants differ in the ways and extent to which parallelization is employed. See the project report for details.

One of these variants is a reasonable sequential implementation in Java. It is not as efficient as the special-purpose DNA C++ code, but not too bad either. The source code can be found here.
